Jasper Research Releases 3 New Models to Improve Image Output for Marketers

Jasper releases three innovative new Flux ControlNets to reinforce its commitment to elevating all marketing (and all marketers) with the power of AI.

When it comes to generative AI, the Marketing department is unique. Gen AI that works for other enterprise functions often breaks when put into practice for Marketing. Why? For one thing, marketing has a really high bar. AI outputs for marketing must be perfectly on brand — pixel perfect, quite literally.

Jasper is the only company in the world with proprietary marketing-specific AI models built for both image and text generation, giving marketers the quality outputs they need to succeed in creating campaigns at scale.

Our industry-leading Jasper Research team has been hard at work, and today, we’re proud to share we’ve released three innovative new Flux ControlNets that reinforce our commitment to elevating all marketing and all marketers with the power of AI.

Understanding Text-to-Image Models & ControlNets

Before we get into the specifics of Jasper’s new ControlNets, let’s get on the same page about what these two things are.

Text-to-image models are generative models that create visual content (images) based on textual descriptions (called prompts). These models leverage deep learning techniques, particularly diffusion models, to understand the semantics of the text input and generate relevant images.

TL;DR: The models that take text and create images.

ControlNets are models designed to enhance the controllability of pre-trained diffusion models, particularly for text-to-image or image-to-image generation tasks. They can take various additional inputs, such as edge maps, depth maps, segmentation maps, or even simple sketches. These inputs guide the image generation process, allowing for more detailed and specific outputs.

TL;DR: Models that guide image generation models to more precisely follow specific instructions or styles.

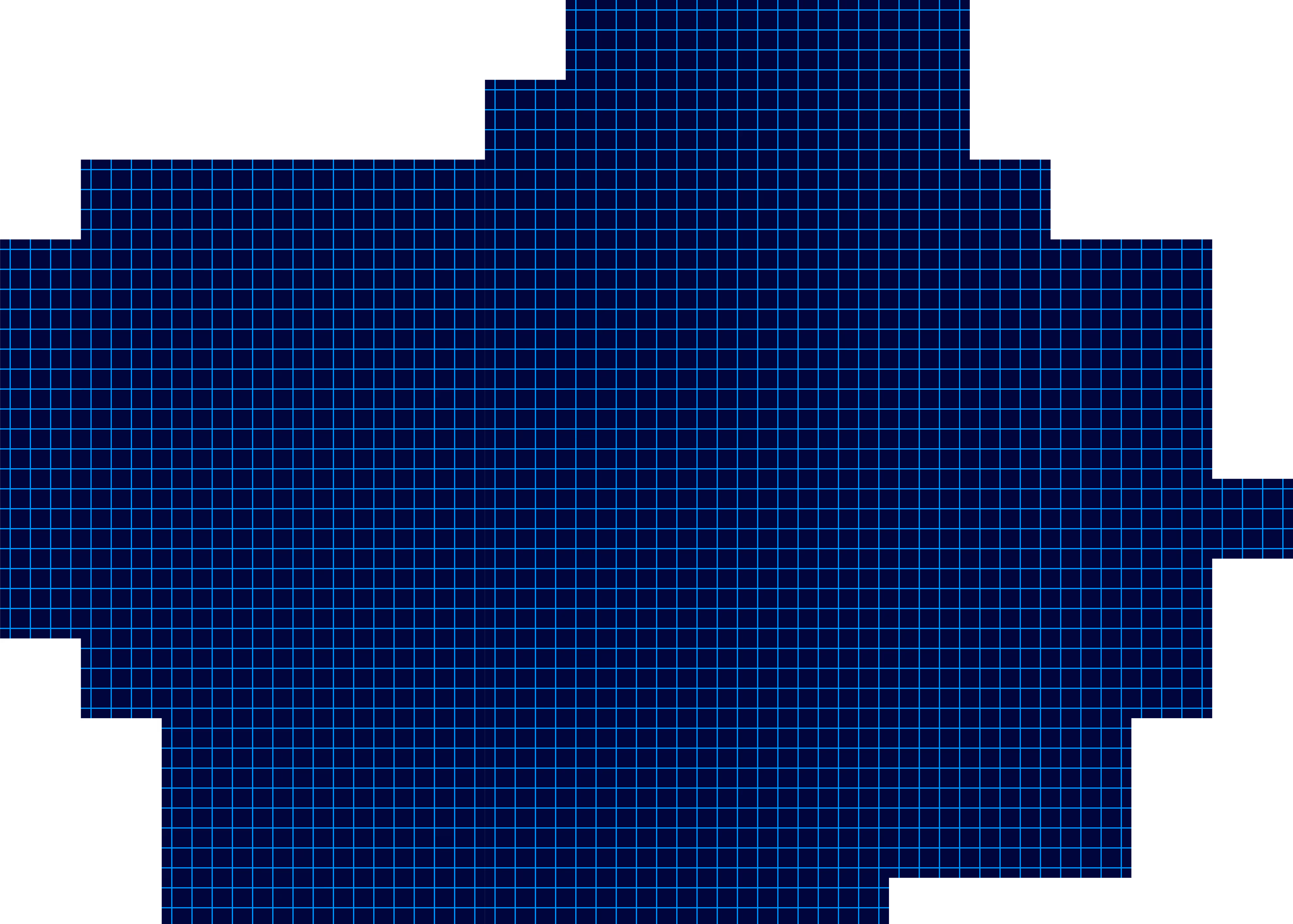

To give you a real-world example, here’s what a ControlNet depth map looks like:

[Image: example ControlNet depth map]

Today’s Release: Three New Flux ControlNets to Provide Even More Precise and On-Brand Image Generation

Today, the Jasper research team released three new Flux ControlNets, which allow you to control the generation from Flux-dev with either a depth map, a surface normal map, or a low-resolution image. Let’s look at each in detail and, more importantly, the functionality they provide to marketers.

1. Depth ControlNet

What it is: A Depth ControlNet guides image generation with a depth map, which captures where items are placed relative to each other (and to the camera) within an image. You can see in the examples below that depth maps are greyscale images, with lighter greys for objects that are closer and darker greys for objects that are farther away.
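To make the greyscale idea concrete, here is a minimal sketch of how a grid of distances could be turned into a depth map where closer means lighter. The function name, the toy scene, and the linear scaling are illustrative assumptions, not Jasper's depth estimator.

```python
def depth_to_greyscale(depth):
    """Map a 2D grid of distances to 0-255 grey levels (nearer = lighter)."""
    flat = [d for row in depth for d in row]
    near, far = min(flat), max(flat)
    span = far - near
    if span == 0:
        # A flat scene has no depth contrast, so return mid-grey everywhere.
        return [[128 for _ in row] for row in depth]
    # Linear scaling (an assumption): the nearest point maps to 255, the farthest to 0.
    return [[round(255 * (far - d) / span) for d in row] for row in depth]


# Hypothetical toy scene, distances in metres. The 1.0 m object is closest.
scene = [
    [5.0, 5.0, 5.0],
    [5.0, 1.0, 4.0],
]
grey = depth_to_greyscale(scene)
```

In this toy scene the background at 5 m comes out black, the object at 1 m comes out white, and the object at 4 m is a dark grey in between.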

What we built: Jasper's research team combined existing open-source models with our internal depth estimator to train the ControlNet. This created a more reliable and higher-performing image generation model.

What it means for marketers: This is particularly valuable when you want to place a new object into an existing image. For example, if you're a B2C marketer and you have a product image that you want to place in different backgrounds, these improvements will give you higher quality and more accurate on-brand image outputs at scale.

Prompt: a statue of a gnome in a field of purple tulips

Theirs

[Image: generated output]

Ours

[Image: generated output]

2. Surface Normal Maps

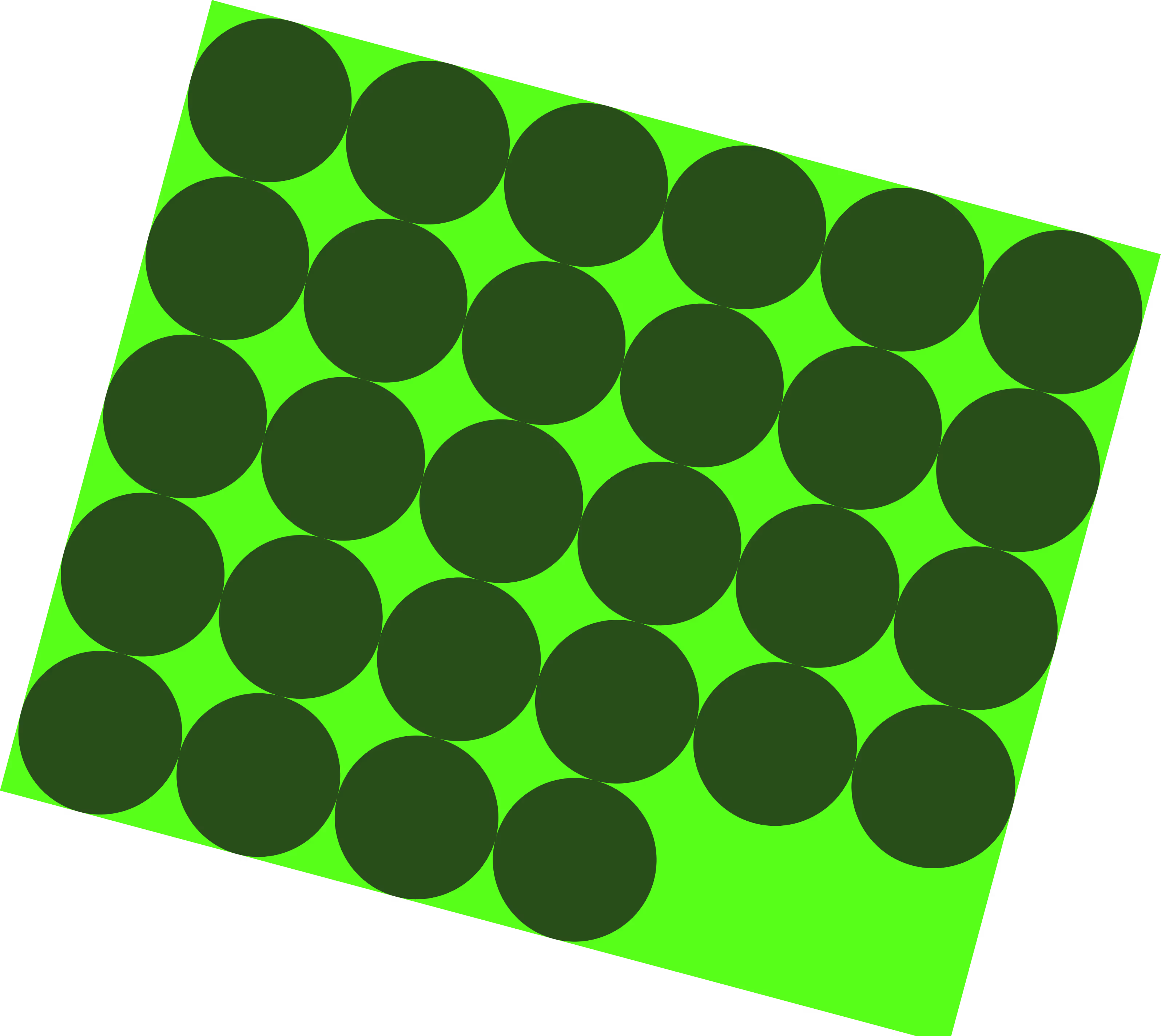

What it is: Where Depth Maps provide information about where items are placed within an image, Surface Normal Maps provide information about the orientation of the surface at each pixel. Said another way, they represent the orientation of surfaces in a 3D scene.
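For readers who want to see what "orientation at each pixel" means in practice, the sketch below estimates a unit normal per pixel from a small depth grid and packs it into an RGB colour. This is a generic textbook approach under stated assumptions (finite differences, a camera-facing z axis, and the common mapping of each component from [-1, 1] to [0, 255]); it is not the estimator Jasper's team built.

```python
import math


def _slope(values, i):
    """Finite-difference slope at index i, one-sided at the borders."""
    lo, hi = max(i - 1, 0), min(i + 1, len(values) - 1)
    return (values[hi] - values[lo]) / (hi - lo) if hi > lo else 0.0


def normals_from_depth(depth):
    """Estimate a unit surface normal (nx, ny, nz) for every pixel."""
    h, w = len(depth), len(depth[0])
    normals = []
    for y in range(h):
        row = []
        for x in range(w):
            dzdx = _slope(depth[y], x)
            dzdy = _slope([depth[r][x] for r in range(h)], y)
            # Sign convention is an assumption: z points toward the camera.
            nx, ny, nz = -dzdx, -dzdy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append((nx / length, ny / length, nz / length))
        normals.append(row)
    return normals


def normal_to_rgb(normal):
    """Map each component from [-1, 1] to [0, 255] (a common encoding)."""
    return tuple(round((c + 1.0) / 2.0 * 255) for c in normal)


# A flat wall facing the camera, and a surface that recedes left to right.
flat_wall = [[2.0, 2.0, 2.0] for _ in range(3)]
ramp = [[1.0, 2.0, 3.0] for _ in range(3)]
wall_colour = normal_to_rgb(normals_from_depth(flat_wall)[1][1])
ramp_normal = normals_from_depth(ramp)[1][1]
```

With this encoding, a surface facing the camera head-on gets the familiar light blue-purple tint of normal maps, while tilted surfaces shift toward other colours.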

What we built: For this ControlNet, Jasper’s Research Team relied on the surface normal estimator they developed internally and combined it with an open-source one (the Boundary Aware Encoder) to create an even more robust model.

Below is an example of Surface Normal Maps and their corresponding images:

What it means for marketers: Combining our Depth Maps and Surface Normal Maps allows for powerful and accurate lighting adjustments in images. Think about a B2C marketer adding a piece of furniture to an existing room backdrop and getting accurate shadows with no additional or complex editing. Or a B2B marketer who needs to update a speaker’s headshot for a campaign promotion and wants the lighting direction on the face to look natural. With Jasper’s image editing models and powerful brand guidelines, all of these edits can also be done at scale.

3. Image Upscaler

What it is: The model takes a low-resolution image as input and outputs a restored and upscaled version of the same image. In this case, the image generation process is not conditioned on a prompt, but solely on the low-resolution image.

What we built: Jasper’s Research Team trained our Upscaler ControlNet on a complex synthetic data degradation scheme. In other words, they trained the model on low-resolution images created by intentionally degrading real images. This involved adding noise (like Gaussian or Poisson), blurring, and JPEG compression to the original images. Through this process they were able to build an even more robust upscaling model.
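The idea of degrading real images to create training inputs can be sketched in a few lines. The example below is a simplified illustration on a greyscale grid using only the Python standard library: it applies a blur, downsamples, then adds Gaussian noise. The function names, the order of the steps, and the parameter values are assumptions, and Poisson noise and JPEG compression are left out because they would need an image library.

```python
import random


def box_blur(img):
    """3x3 mean filter, repeating edge pixels at the border."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            total = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
            row.append(round(total / 9))
        out.append(row)
    return out


def downsample(img, factor):
    """Keep every `factor`-th pixel in both directions."""
    return [row[::factor] for row in img[::factor]]


def add_gaussian_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise and clamp back to the 0-255 range."""
    return [
        [min(255, max(0, round(p + rng.gauss(0, sigma)))) for p in row]
        for row in img
    ]


def degrade(img, factor=4, sigma=8.0, seed=0):
    """Produce a low-resolution, noisy version of a clean image."""
    rng = random.Random(seed)  # seeded so the example is reproducible
    return add_gaussian_noise(downsample(box_blur(img), factor), sigma, rng)


# Hypothetical 16x16 "clean" image: a simple horizontal gradient.
original = [[x * 16 for x in range(16)] for _ in range(16)]
low_res = degrade(original)
```

Each (low_res, original) pair is one training example: the model sees the degraded image as input and learns to reconstruct the clean one.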

What it means for marketers: With this functionality, marketers can upscale images to improve resolution at scale. This matters for marketers whose existing assets need to be updated to fit a new format or dimension, such as a campaign asset that was made for a lower-resolution channel (Google Display) and now needs to run in a higher-resolution format (LinkedIn Ads). It can also be extremely powerful for eCommerce marketers who are tasked with maintaining and updating hundreds, thousands, or even millions of product images.

Below, you can see the before and after of what this looks like in action.

More Gen AI Innovations for Marketers and The Open Source Community from Jasper

Jasper is committed to advancing research and building proprietary, marketing-specific models to elevate all marketing and all marketers with the power of AI. These models in combination represent a meaningful shift forward in image editing and creation at scale, tuned specifically to the needs of B2C and B2B marketers.

For the open-source community, Jasper's latest release of three Flux ControlNets marks a significant step forward in text-to-image generation, reinforcing our dedication to open-source innovation.

And it’s fair to say the Open Source community is pretty excited about our new models as well: https://www.youtube.com/watch?v=L9q3T-dYn5U

Useful links

If you are interested in these models you can find the open weights at the following links:

- ControlNet Depth: https://huggingface.co/jasperai/Flux.1-dev-Controlnet-Depth

- ControlNet Surface Normals: https://huggingface.co/jasperai/Flux.1-dev-Controlnet-Surface-Normals

- ControlNet Upscaler: https://huggingface.co/jasperai/Flux.1-dev-Controlnet-Upscaler

If you want to try the models, we also released a Gradio demo for the image upscaling task, available at the following link:

Gradio Demo: https://huggingface.co/spaces/jasperai/Flux.1-dev-Controlnet-Upscaler
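If you would rather run the upscaler locally, the sketch below shows one way it might be loaded with the Hugging Face diffusers library. Treat it as a starting point under assumptions: it presumes a recent diffusers release that includes `FluxControlNetModel` and `FluxControlNetPipeline`, a CUDA GPU, and access to the `black-forest-labs/FLUX.1-dev` base weights. The `target_size` helper, the rounding to multiples of 16, and the sampling values (conditioning scale, steps, guidance) are illustrative choices, not settings stated in this post.

```python
def target_size(width, height, factor=4, multiple=16):
    """Scale a size by `factor`, rounded down to a multiple of `multiple`.

    Rounding to 16 is an assumption made to keep dimensions pipeline-friendly.
    """
    def snap(value):
        return max(multiple, (value * factor) // multiple * multiple)

    return snap(width), snap(height)


def upscale(image_path, factor=4):
    """Upscale one image with the Upscaler ControlNet (needs a GPU)."""
    # Heavy dependencies are imported here so target_size works without them.
    import torch
    from diffusers import FluxControlNetModel, FluxControlNetPipeline
    from diffusers.utils import load_image

    controlnet = FluxControlNetModel.from_pretrained(
        "jasperai/Flux.1-dev-Controlnet-Upscaler", torch_dtype=torch.bfloat16
    )
    pipe = FluxControlNetPipeline.from_pretrained(
        "black-forest-labs/FLUX.1-dev",  # assumed base model repository
        controlnet=controlnet,
        torch_dtype=torch.bfloat16,
    )
    pipe.to("cuda")

    # The low-resolution image is resized up front and used as the control input.
    control_image = load_image(image_path)
    width, height = target_size(*control_image.size, factor=factor)
    control_image = control_image.resize((width, height))

    return pipe(
        prompt="",  # generation is conditioned on the image, not on text
        control_image=control_image,
        controlnet_conditioning_scale=0.6,  # illustrative value
        num_inference_steps=28,  # illustrative value
        guidance_scale=3.5,  # illustrative value
        height=height,
        width=width,
    ).images[0]
```

Calling `upscale("banner.png").save("banner_4x.png")` would then write the upscaled result, where `banner.png` is a placeholder file name.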
